Designing the B2B Advertising Operating System: Audiences Are Dead Data. Signals Are Live Systems.

Why B2B in 2026 Is About Teaching Systems, Not Picking Audiences

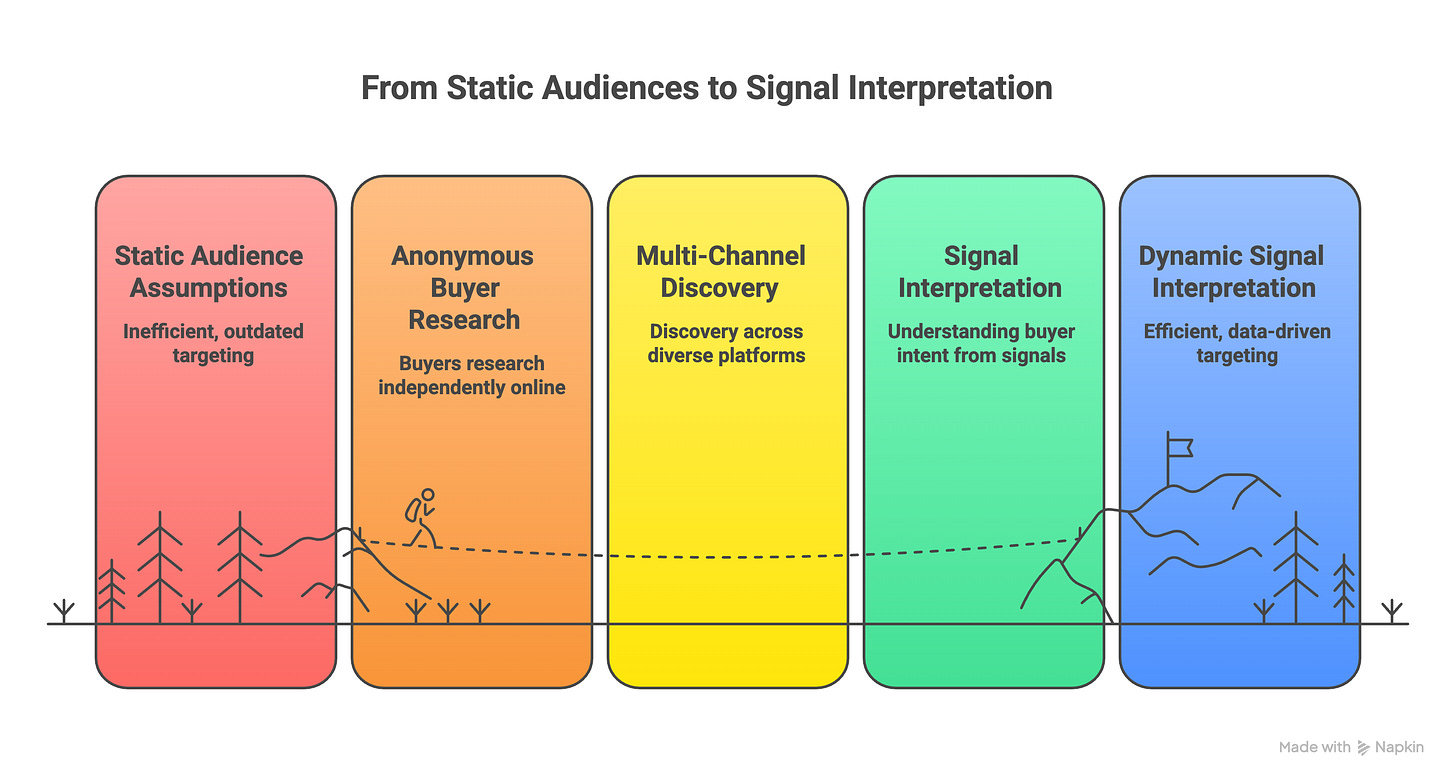

For the last decade, every B2B digital marketing strategy has started with the same question: “Who should we target?”

It is often a harder question to answer than it first appears. The answer usually comes from ideal customer profile (ICP) workshops, from old, static TAL (target account list) spreadsheets, or from guesswork rather than hard science.

That leaves us targeting audiences that amount to segments frozen in time, uploaded into platforms that assume the market politely stands still. This approach is devoid of dynamism and doesn’t reflect the oscillations in buying behaviour.

In 2026, that framing is quietly breaking.

Not because ABM is wrong.

Not because targeting doesn’t matter.

Not because audiences no longer matter, but because they can no longer be decided in advance.

Modern B2B demand doesn’t announce itself neatly. Buyers research anonymously in an increasingly dark internet, move across surfaces you don’t control, and progress in uneven bursts. Discovery happens through AI answers, dark funnel content, private Slack/WhatsApp groups, CTV exposure, peer conversations, and internal delegation, long before sales ever gets a signal.

In that world, static audiences aren’t just inefficient. They’re assumptions, and often assumptions based on old data.

What scales in 2026 isn’t audience selection; it’s signal interpretation.

From picking audiences to reading behaviour

Here’s the shift most teams haven’t fully internalised yet:

Audiences are no longer the input to B2B strategy.

They’re the output of behaviour, captured by sets of signals (ad engagement scoring, on-site High-Value Actions, and scoring models linked to real buying behaviours).

In previous models, teams decided who mattered first, then looked for evidence later.

In modern B2B, I think you need to invert that logic.

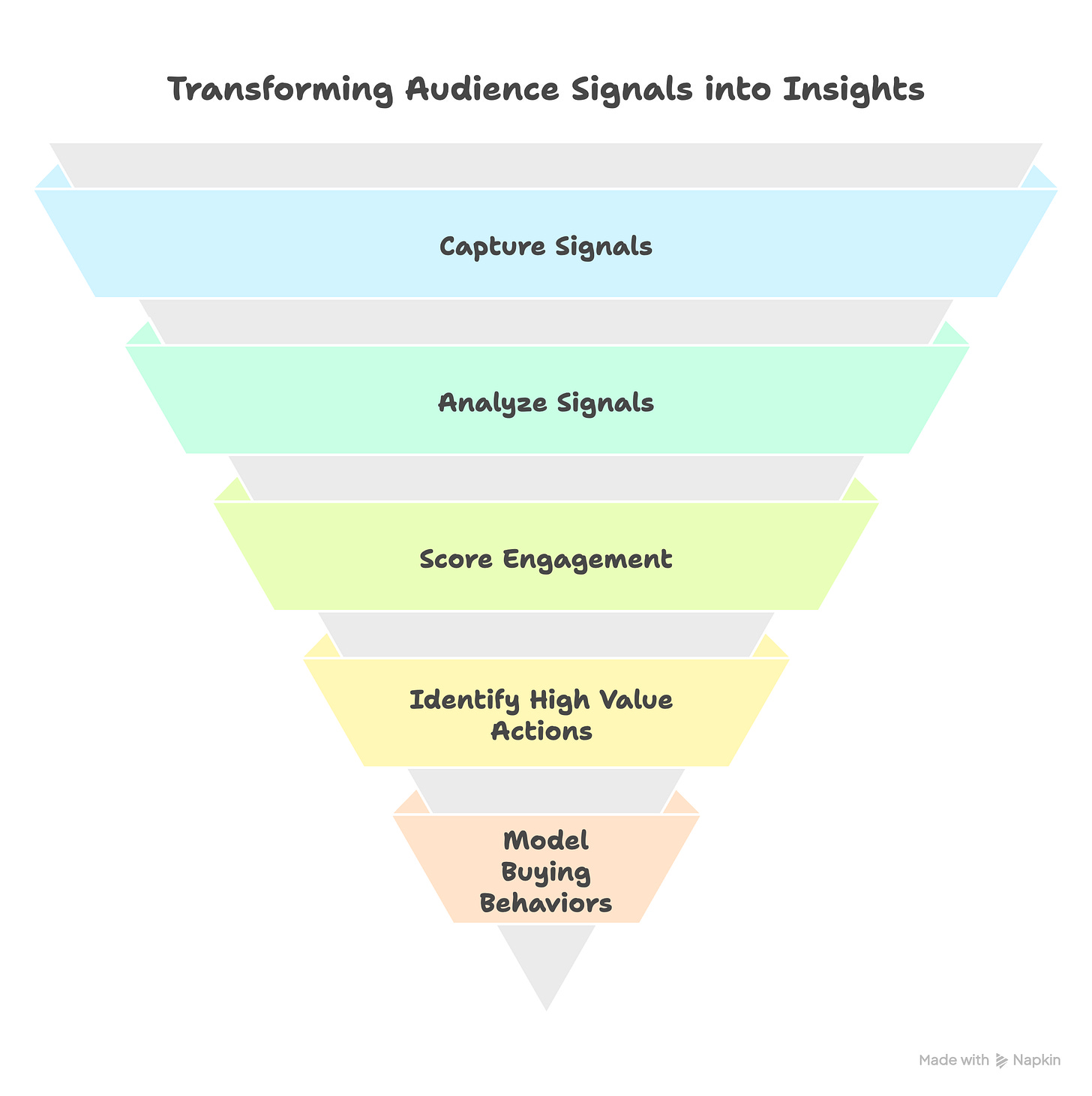

I think the whole system should start by defining the behaviours that indicate buying progress (depth of engagement, repetition, attention metrics, multi-stakeholder activity, decision-support content consumption) and then letting systems observe, score, and cluster those behaviours over time. Let machine learning do what it does best: find order in the chaos and build scoring models that act like custom algorithms, steering adtech towards outcomes relevant to your business rather than generic optimisation towards meaningless signals like click-through rates.

Those clusters become your audience.

Not fixed lists.

Not frozen segments.

Temporary states.

Audiences stop being something you pick and start being something your signals reveal.
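To make the inversion concrete, here is a minimal Python sketch, assuming each account’s activity arrives as a list of behaviour names and that a weight has already been chosen per behaviour. The behaviour names, weights, and threshold are hypothetical placeholders rather than a recommended model; note that a shallow proxy like an ad click is deliberately worth nothing.

```python
# Hypothetical behaviour weights: illustrative values, not a recommended model.
BEHAVIOUR_WEIGHTS = {
    "ad_click": 0.0,  # shallow proxy: deliberately worth nothing
    "pricing_page_visit": 5.0,
    "security_page_visit": 8.0,
    "implementation_guide": 10.0,
    "new_stakeholder_engaged": 12.0,
}


def score_account(events: list[str]) -> float:
    """Sum the weights of an account's observed behaviours; unknown events score 0."""
    return sum(BEHAVIOUR_WEIGHTS.get(event, 0.0) for event in events)


def emergent_audience(activity: dict[str, list[str]], threshold: float) -> set[str]:
    """The 'audience' is whichever accounts the behaviour currently puts above threshold."""
    return {
        account
        for account, events in activity.items()
        if score_account(events) >= threshold
    }


activity = {
    "acme": ["ad_click", "ad_click", "ad_click"],
    "globex": ["pricing_page_visit", "security_page_visit", "new_stakeholder_engaged"],
    "initech": ["implementation_guide", "pricing_page_visit"],
}
print(sorted(emergent_audience(activity, threshold=15.0)))  # ['globex', 'initech']
```

Rerun it tomorrow with fresh events and the audience changes by itself; nobody has to edit a list.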

Why this changes everything

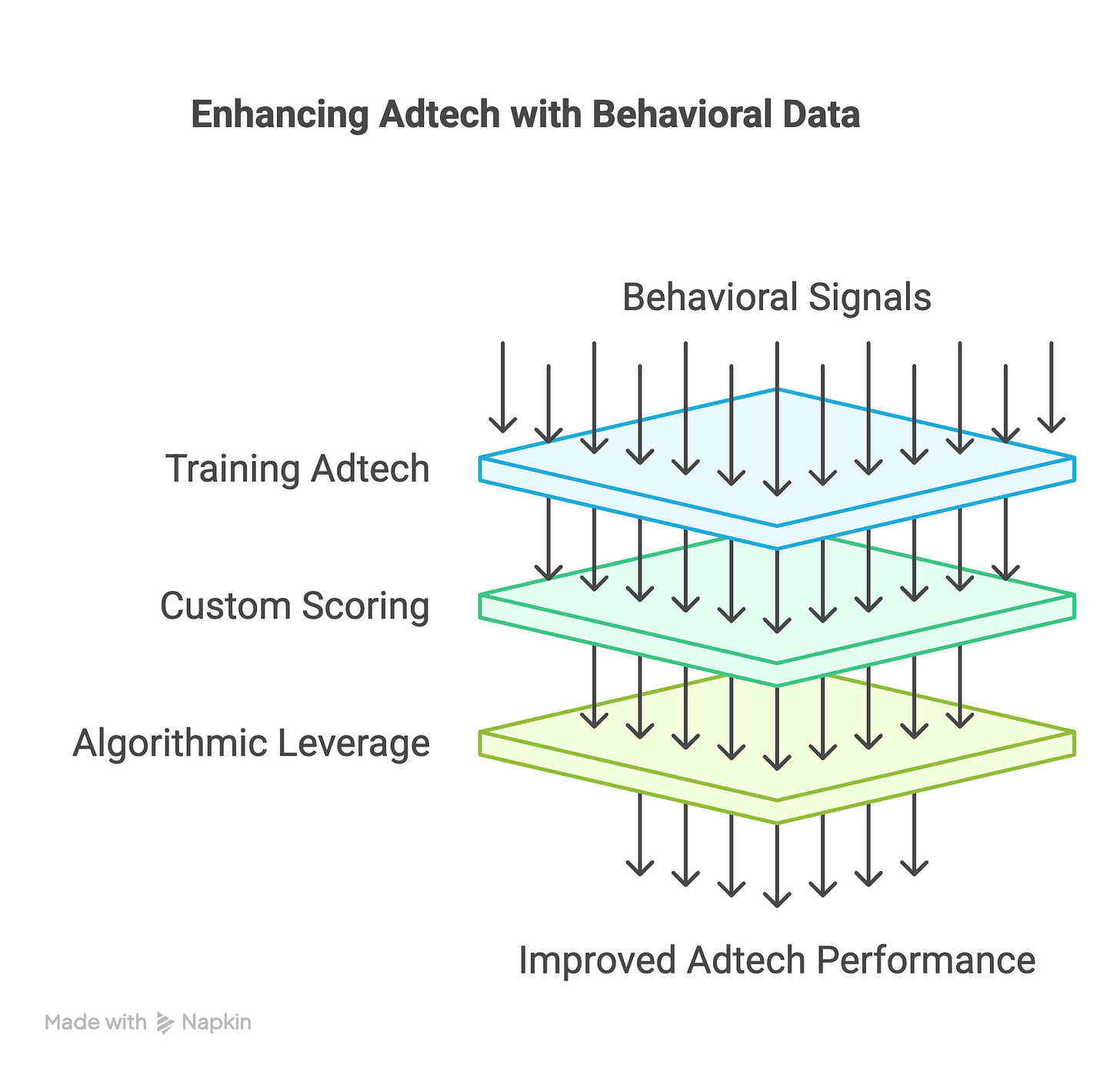

Ad buying platforms and Demand Side Platforms don’t optimise to CSV lists.

They optimise to feedback.

When you feed them static audiences and shallow proxies, you’re asking them to guess. The result is an attempt to penetrate every account evenly, treating them all as equals, instead of prioritising the signals that equate to pipeline impact. Even penetration should be limited to a ‘learning budget’ that the platforms’ algorithms use to cast for signal, taking no more than 20% of spend, with the majority of working media pushed into accounts that are demonstrating real-world, modern buying behaviours.
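The 20% rule of thumb is easy to express as arithmetic. The sketch below assumes you already have a non-negative engagement score per account; the function name, the default `learning_share`, and the fallback when no account shows any signal are illustrative assumptions, not a platform feature.

```python
def allocate_budget(
    total: float, scores: dict[str, float], learning_share: float = 0.2
) -> dict[str, float]:
    """Split spend into an even 'learning' layer plus score-weighted working media.

    learning_share is spread evenly across every account (the cast-for-signal
    budget); the remainder follows each account's engagement score.
    """
    if not scores:
        return {}
    learning = total * learning_share
    working = total - learning
    even_slice = learning / len(scores)
    positive = {account: s for account, s in scores.items() if s > 0}
    total_score = sum(positive.values())
    allocation = {}
    for account in scores:
        if total_score > 0:
            weighted = working * positive.get(account, 0.0) / total_score
        else:
            # No signal anywhere yet: fall back to spreading working media evenly.
            weighted = working / len(scores)
        allocation[account] = even_slice + weighted
    return allocation


print(allocate_budget(1000.0, {"a": 3.0, "b": 1.0, "c": 0.0, "d": 0.0}))
# {'a': 650.0, 'b': 250.0, 'c': 50.0, 'd': 50.0}
```

Accounts with no signal still receive their share of the learning budget, so the platform keeps casting for new signal while most of the money follows observed behaviour.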

When you feed the world’s best adtech high-confidence behavioural signals, you’re training it. When you feed it custom-scored behaviours that reverse-map to closed accounts, you’re effectively running that adtech on your own custom algorithm.

This is the real shift underneath all the noise about AI, ABM, and programmatic evolution.

The winners in 2026 won’t be the teams with the most precise targeting. Hyper-precise targeting is often counter-productive: once we have identified the right accounts through the right signals, we usually need to cast a slightly LESS precise but bigger net to maximise our odds of reaching them.

The winning teams will be those who taught their systems what good looks like and let those systems adapt in real time.
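One simple way to reverse-map behaviours to closed accounts is a lift ratio: how often a behaviour appears among closed-won accounts compared with how often it appears across all accounts. This is a deliberately basic stand-in, assuming you can export which behaviours each account showed before closing; a real model would also need far larger samples and would control for account size and timing. The account and behaviour names are hypothetical.

```python
def behaviour_lift(
    account_behaviours: dict[str, set[str]], closed_won: set[str]
) -> dict[str, float]:
    """Lift = (share of closed-won accounts showing a behaviour) / (share of all accounts showing it).

    Values above 1.0 mean the behaviour is over-represented among accounts that closed.
    """
    all_behaviours = set().union(*account_behaviours.values())
    won = [a for a in account_behaviours if a in closed_won]
    lift = {}
    for behaviour in sorted(all_behaviours):
        rate_all = sum(behaviour in b for b in account_behaviours.values()) / len(account_behaviours)
        rate_won = (
            sum(behaviour in account_behaviours[a] for a in won) / len(won) if won else 0.0
        )
        lift[behaviour] = rate_won / rate_all
    return lift


accounts = {
    "a": {"pricing", "security"},
    "b": {"blog"},
    "c": {"security", "blog"},
    "d": {"blog"},
}
print({b: round(v, 2) for b, v in behaviour_lift(accounts, {"a", "c"}).items()})
# {'blog': 0.67, 'pricing': 2.0, 'security': 2.0}
```

Behaviours with high lift are candidates for heavier scoring weights; the popular-but-unpredictive ones (here, blog reads) get discounted.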

Buyer behaviour is fragmenting and becoming less predictable

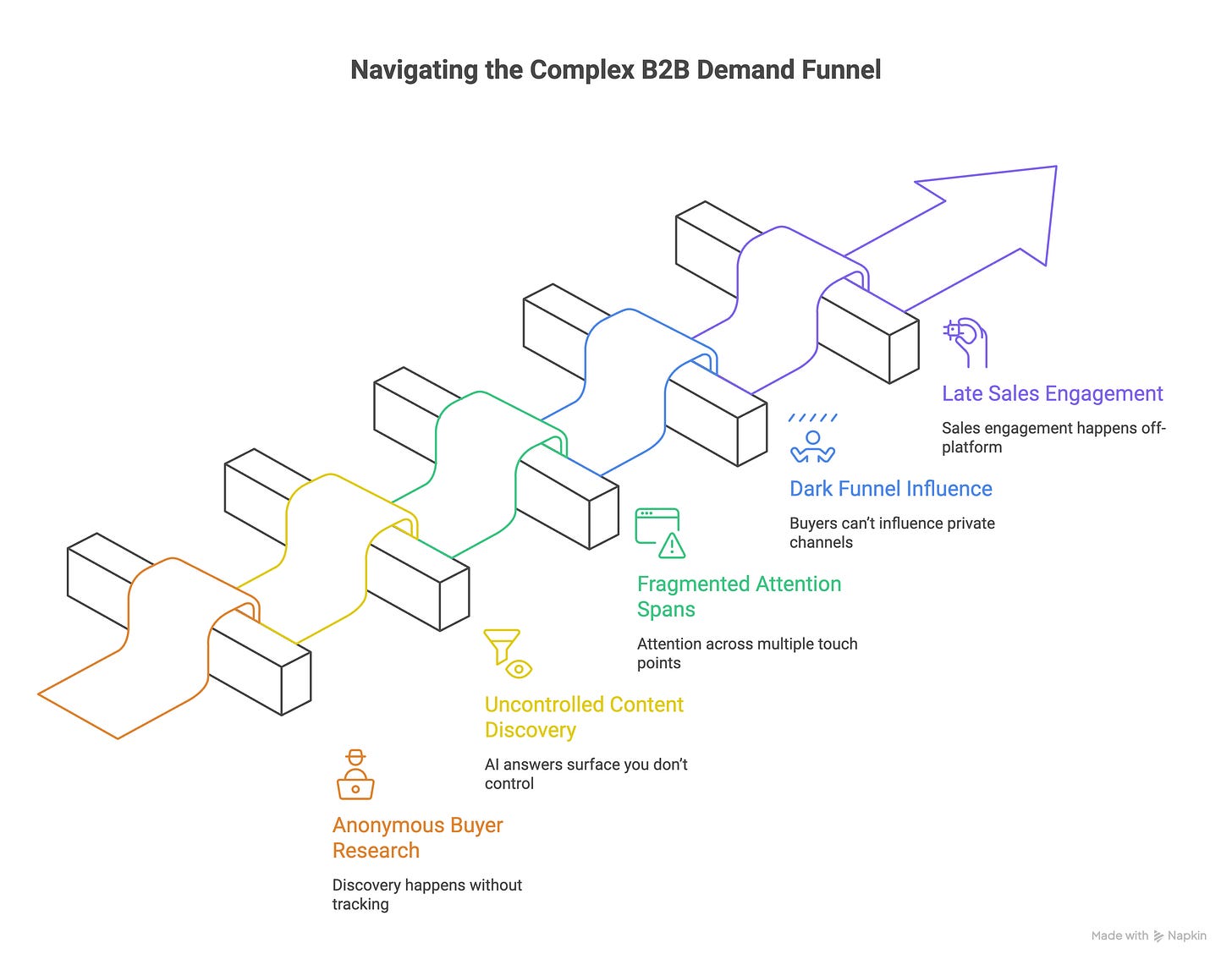

If your 2026 plan still starts with audience selection, you’re already behind. Before LLMs, buyer behaviour was more visible: more vendor site visits, more publisher site visits, more transparent and trackable behaviour. Traditional search encouraged broad queries of a few keywords, which funnelled traffic into optimised landing pages where it could be observed. LLM-based discovery is more personalised, adapts to each conversation, and encourages very detailed, precise prompts, which push buyers deeper and faster while leaving far fewer footprints behind.

Modern B2B demand doesn’t form in a straight line. It never did, but LLM buying companions and an ever-expanding dark funnel make that demand incredibly difficult to follow.

Buyers research anonymously

Discovery happens via agentic search, AI answers, and content surfaces you don’t control, can’t see, and can’t track

Attention is fragmented across web, CTV, audio, social, and dark funnel touch points, and increasingly these dark funnel drivers, from private industry Slack communities to WhatsApp groups, are proving to be lynchpin channels that vendors can’t directly influence

Sales engagement happens late, and often off-platform

In that world, static targeting logic collapses.

What scales isn’t precision targeting. What scales is feedback, and the systems designed to capture it, score it, and feed it into a B2B advertising operating system.

The quiet death of audience-first thinking

Audience-first thinking assumes three things that are no longer true:

That discovery is linear

Research → shortlist → engage → buy

In reality, buyers loop, stall, delegate, and resurface months later.

That intent is observable at the moment you need it

Most real intent happens off-signal, in private, or before your stack can see it.

That humans should stay in the loop

Manual segment curation simply can’t keep pace with the velocity, volume, and dynamism of the signals, touch-points, and scores these systems need to react to.

This is why ABM feels “hard to scale”.

Not because of data quality.

Not because of media inefficiency.

But because we’ve been optimising the wrong thing: focusing on delivering ads evenly across old, redundant TAL spreadsheets full of disqualified accounts, rather than optimising for impact and for the signals that actually equate to pipeline.

Campaign thinking is the real bottleneck

Most B2B stacks are still organised around campaigns:

Launch

Flight

Measure

Report

Learn, rinse and repeat

But platforms don’t learn from campaigns. They learn from patterns.

And patterns only emerge when you stop treating media as a sequence of launches and start treating it as a continuous training loop: one that casts the net for signal, draws down account-level engagement scores and patterns, reacts, and dynamically drives ad targeting in near real time.

This is the pivot most teams haven’t made yet. They are still busy refreshing TALs quarterly and scoring themselves on even penetration.
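Here is a sketch of one turn of that continuous loop, assuming account-level scores are refreshed on some schedule (hourly or daily) and the result is then pushed to your DSP as an audience update; that push is platform-specific and not shown. The entry and exit thresholds are hypothetical. Using a higher bar to enter than to leave (hysteresis) stops accounts hovering near the line from flapping in and out on every refresh.

```python
def refresh_targeting(
    scores: dict[str, float],
    currently_targeted: set[str],
    enter_at: float = 60.0,
    exit_below: float = 40.0,
) -> tuple[set[str], set[str], set[str]]:
    """One pass of the loop: returns (new_targeted, added, removed).

    Accounts missing from `scores` are treated as having no signal and drop out.
    """
    new_targeted = set()
    for account, score in scores.items():
        if account in currently_targeted:
            # Already targeted: stay in unless the score falls below the exit bar.
            if score >= exit_below:
                new_targeted.add(account)
        elif score >= enter_at:
            # Not yet targeted: must clear the (higher) entry bar.
            new_targeted.add(account)
    added = new_targeted - currently_targeted
    removed = currently_targeted - new_targeted
    return new_targeted, added, removed


targeted = {"b", "c", "e"}
scores = {"a": 70.0, "b": 50.0, "c": 30.0, "d": 65.0}
targeted, added, removed = refresh_targeting(scores, targeted)
print(sorted(added), sorted(removed))  # ['a', 'd'] ['c', 'e']
```

Run on every score refresh rather than once a quarter, the thresholds become the knobs you tune instead of the list itself.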

The real shift that I’m expecting to see in 2026

Here’s what’s actually changing under the surface:

From → To

Audiences → Signals

Campaigns → Feedback loops

CTR → High-Value Actions

Attribution → Training data

Media buying → System optimisation

This isn’t semantics, even if it may look like it. Take our cornerstone theme: if audiences are morphing into signals, aren’t they two names for the same thing? The discerning reader may think ‘signals’ simply equate to audiences, making this a woolly and meaningless ‘shift’.

So let’s elaborate. This shift does not mean audiences disappear. They remain a cornerstone of Demand Side Platforms and of how those platforms group targetable entities into clusters for bidding.

What it does mean is that audiences stop being manually defined inputs and start becoming emergent outputs of behaviour.

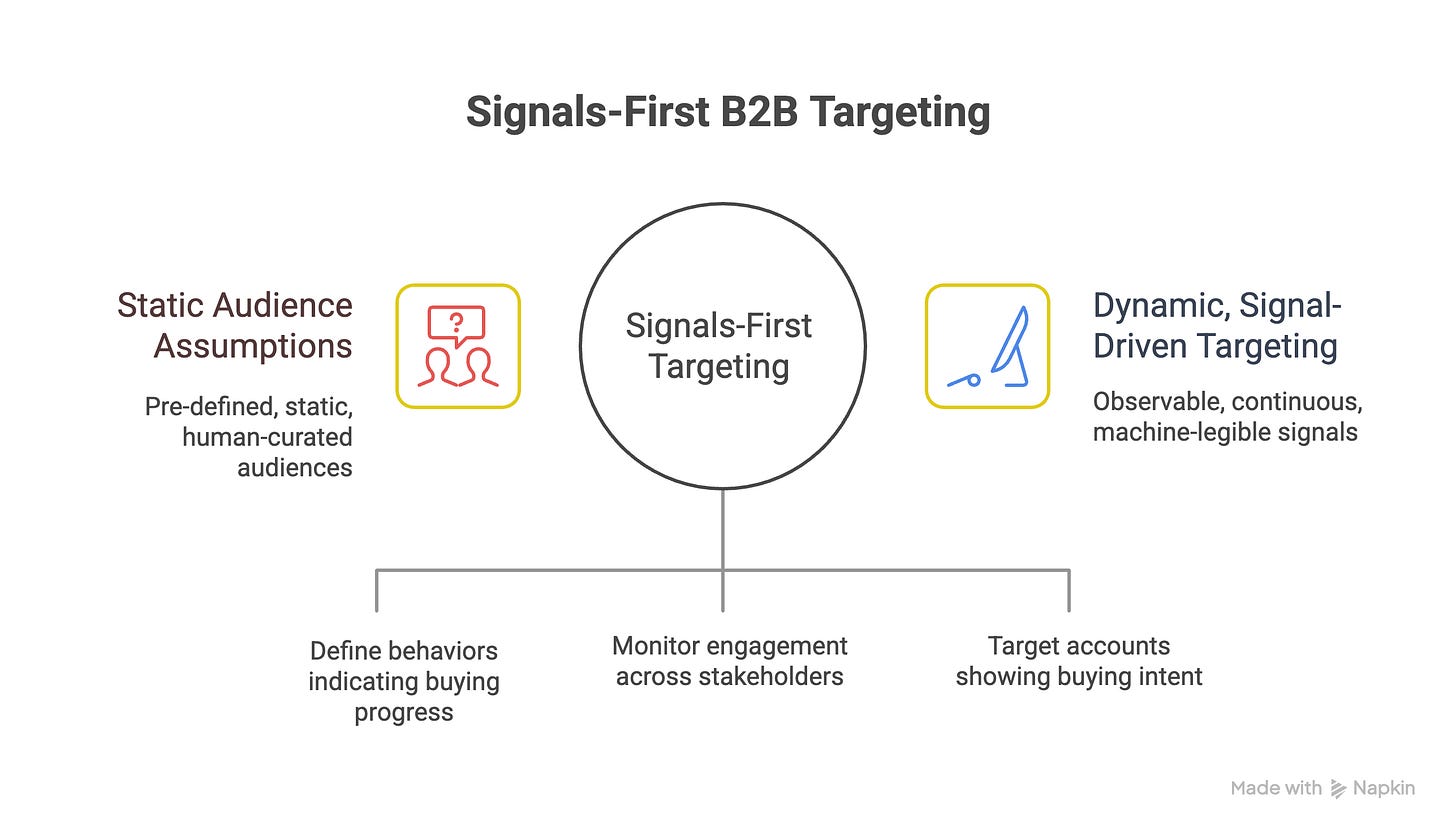

Old model: audiences as assumptions

In the traditional B2B model, audiences are:

Pre-defined, e.g. “here is my 8-year-old TAL of c. 2k accounts”

Static, as per above, often untouched for years

Human-curated

Based on who we think might buy

You decide up front:

Which companies matter

Which titles matter

Which segments get budget

Signals, if they exist at all, are used after the fact to justify performance.

Audience first.

Signals second.

New model: signals as truth

In a signals-first world, you invert that logic.

You don’t ask:

“Which accounts should we target?”

You ask:

“What behaviours reliably indicate buying progress?”

These behaviours are exactly what the move towards High-Value Action and engagement tracking is trying to capture. The pre-2026 approach was to watch them emerge from static audience assumptions; the new idea is that they feed the targeting. Examples include:

Repeated visits to implementation or security pages

Cross-session engagement across multiple stakeholders

Depth of attention, not just frequency

Interaction with decision-support content

Post-view behaviour following upper-funnel exposure

These are signals.

They are:

Observable

Continuous

Decaying over time

Comparable across accounts

Machine-legible

And crucially:

They exist before you decide who matters.
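Of those properties, decay is the one most scoring set-ups skip. A common way to model it is exponential decay with a half-life. The sketch below assumes a 14-day half-life purely for illustration, with hypothetical behaviour names, and shows why the same burst of activity is worth far less two months later.

```python
from dataclasses import dataclass


@dataclass
class Signal:
    behaviour: str
    weight: float
    days_ago: float


def decayed_score(signals: list[Signal], half_life_days: float = 14.0) -> float:
    """Exponential decay: each signal loses half its value every half_life_days."""
    return sum((s.weight * 0.5 ** (s.days_ago / half_life_days) for s in signals), 0.0)


recent_burst = [Signal("security_page_visit", 10.0, 0.0), Signal("pricing_page_visit", 10.0, 14.0)]
old_burst = [Signal("security_page_visit", 10.0, 56.0), Signal("pricing_page_visit", 10.0, 70.0)]
print(decayed_score(recent_burst))  # 15.0
print(decayed_score(old_burst))  # 0.9375
```

Because every account is scored with the same weights and the same decay curve, the resulting numbers stay comparable across accounts.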

So… are signals representing audiences?

Not directly.

Signals represent behaviour: the observable outcome of what audiences do.

Therefore, audiences emerge from behaviour.

Think of it this way:

An audience is a label

A signal is evidence

In 2026, systems trust evidence more than labels.

How audiences emerge in a signals-first system

Instead of saying:

“These 2,000 accounts are our audience”

You let the system infer:

Which accounts are active

Which are accelerating

Which are stalling

Which are regressing

Which are worth sales attention now

Audiences become:

Dynamic clusters of behaviour

Threshold-based (scoring) groupings

Outputs of scoring models

Temporary states, not fixed lists

In other words:

Audiences stop being lists and start being states.
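The states listed above can be sketched as a small rule set that compares an account’s score this period with the previous period. The thresholds and the 25% change band are hypothetical and would need tuning against your own pipeline data; the extra “inactive” state is an assumption added for accounts with no signal at all.

```python
def classify_state(
    current: float,
    previous: float,
    *,
    active_at: float = 20.0,
    sales_ready_at: float = 80.0,
    move: float = 0.25,
) -> str:
    """Turn two period scores into a temporary state rather than a fixed list membership."""
    if current >= sales_ready_at:
        return "sales-ready"  # worth sales attention now
    if current == 0 and previous == 0:
        return "inactive"
    if current >= previous * (1 + move):
        return "accelerating"  # up by at least `move` (or first signal after zero)
    if current <= previous * (1 - move):
        return "regressing"  # down by at least `move`
    # Roughly flat: healthy if engagement is meaningful, stalling if it is low.
    return "active" if current >= active_at else "stalling"


for current, previous in [(90, 50), (50, 30), (20, 40), (45, 42), (10, 11), (0, 0)]:
    print(current, previous, classify_state(current, previous))
```

The same account can move through several of these states in a quarter, which is exactly what a static list can’t express.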

Why this matters for execution

Platforms don’t optimise to audiences. They optimise to feedback.

When you feed platforms:

Static audiences

Weak proxy metrics (CTR, visits, impressions)

You’re asking them to guess.

When you feed them:

High-confidence signals

Clear success definitions

Behavioural rewards

You’re training them.

The system doesn’t need to know who the audience is.

It needs to know what good looks like.

The 2026 Mental Model

Audience thinking says:

“Find the right people, then see what happens.”

Signal thinking says:

“Observe what matters, reward it, and let the system adapt.”

That’s the leap most B2B teams haven’t made yet.

It’s a fundamental change in where value is created.

In 2026, audiences aren’t something you pick — they’re something your signals reveal. Audiences become the output of behaviour, not the input to strategy.

The new job of B2B teams

The most important questions are no longer:

“Which accounts should we target?”

“Which channel performs best?”

They’re now:

What behaviour do we reward in our algo?

What signals do we trust?

What does “sales-ready” actually mean in data?

Are we feeding our platforms truth or proxies?

This is why so much “AI in B2B” underwhelms.

AI doesn’t fix bad definitions.

It amplifies them. AI is world-class at amplifying chaos.

If your success metric is shallow, your outcomes will be too, just faster and at greater scale. Yikes.

Why most AI-powered B2B stacks disappoint

Here’s the part no one wants to admit:

Most AI failures in B2B aren’t technical.

They’re philosophical.

Models optimise to what you measure; feed them weak proxies like clicks and it’s garbage in, garbage out

Platforms reward what you define as success and get you more of it, cheaper

Systems don’t understand “revenue”, only signals that approximate it

If you feed:

Clicks

Cheap form fills

Early-stage noise

You don’t get better performance. You get better exploitation of bad goals.

This is why 2026 won’t be won by “better AI”. It’ll be won by better signal design.

What “training systems” actually means

Training systems doesn’t mean:

More dashboards

More models

More vendors

It means doing the hard, unglamorous work of:

Defining meaningful behaviours

Weighting them honestly

Allowing decay, recency, and context

Feeding those signals back into execution platforms

Think less target list.

More reward function.

A practical 2026 starting point

If you’re planning for the year ahead, start here:

Define 10–20 High-Value Actions that actually indicate progress

Map them to funnel stages (not channels)

Apply realistic weighting and decay

Push those signals into analytics, CRM, and media platforms

Let platforms optimise to meaning, not proxies

This doesn’t remove strategy. It forces it upstream, where it belongs.
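The first three steps can be sketched as a small catalogue that maps each HVA to a funnel stage and a weight, then rolls an account’s events up per stage. The HVA names, stages, and weights are hypothetical, decay is left out to keep the example short, and pushing the results into analytics, CRM, or media platforms depends on each platform’s own APIs, so it isn’t shown.

```python
from collections import defaultdict
from typing import Optional

# Hypothetical HVA catalogue: behaviour -> (funnel stage, weight).
HVA_CATALOGUE = {
    "webinar_attended": ("awareness", 2.0),
    "pricing_page_visit": ("consideration", 5.0),
    "security_docs_viewed": ("evaluation", 8.0),
    "implementation_guide_read": ("evaluation", 10.0),
}
STAGE_ORDER = ["awareness", "consideration", "evaluation"]


def stage_scores(events: list[str]) -> dict[str, float]:
    """Roll an account's events up into a score per funnel stage (not per channel)."""
    scores: dict[str, float] = defaultdict(float)
    for event in events:
        if event in HVA_CATALOGUE:  # non-HVA events such as raw clicks are ignored
            stage, weight = HVA_CATALOGUE[event]
            scores[stage] += weight
    return dict(scores)


def furthest_stage(events: list[str]) -> Optional[str]:
    """The most advanced stage with any HVA evidence, or None if there is none."""
    scores = stage_scores(events)
    reached = [stage for stage in STAGE_ORDER if scores.get(stage, 0.0) > 0]
    return reached[-1] if reached else None


events = ["webinar_attended", "webinar_attended", "pricing_page_visit", "security_docs_viewed", "ad_click"]
print(stage_scores(events))  # {'awareness': 4.0, 'consideration': 5.0, 'evaluation': 8.0}
print(furthest_stage(events))  # evaluation
```

Mapping to stages rather than channels means a security-docs view counts the same whether it came from paid social, CTV, or an email link.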

Where this leaves ABM

ABM doesn’t disappear in 2026. Quite the opposite: we’re really talking about ABM 2.0, where account-centric thinking (which is correct) is amplified by scientific account selection and prioritisation. We already have the tools to reach accounts; this is about reaching the right ones, at the right moment, across omnichannel touch points.

So “ABM” stops being the input. It becomes the output of a living system:

Accounts emerge

Engagement thresholds adapt

Priority shifts dynamically

Sales alignment improves because the logic is shared

The best ABM teams this year won’t be the most precise.

They’ll be the most adaptive.

The real takeaway

2026 is the year B2B marketing stops asking:

“Who should we target?”

And starts asking:

“What are we teaching our systems to value?”

The teams who get this right won’t just outperform.

They’ll leave their competitors in the dust, snaring in-market accounts before those competitors even know the accounts are showing intent.

💬 What to Ask GPT Next

“Using the ideas in this post, help me define 15 High-Value Actions for a B2B SaaS business and map them to funnel stages.”

“Based on a signals-first model, design a simple weighting and decay framework for account engagement scoring.”

“Turn our current ABM approach into a system-training model using feedback loops instead of static TALs.”

“Help me audit our current metrics and identify which ones are proxies vs true training signals.”

“Create a 90-day roadmap to move from campaign-based optimisation to system-based optimisation in B2B.”
