Stop Calling It Intent. Start Building a Signal Stack.

From static intent to a stack of signals: sensors capture signals, sensors produce scores, and scores power scientific B2B targeting.

Intent is a powerful word, and it has encouraged lazy thinking.

It implies a clean, binary truth (an account is either “in-market” or not), when reality in B2B is messy, dynamic, and probabilistic.

I was delighted to stumble on a 6sense article that gets the pivot right: treat intent as one class of signal inside a broader signal stack, and treat your go-to-market like a sensor network that is constantly sensing, fusing, and acting in near real time.

This is the same argument I make for High Value Actions (HVAs), intent, and other data points: they are all signals. Sensors capture signals, sensors output scores, and scores fuel tactics, target account lists (TALs), bidding strategies, and reporting sensitivity (urgency, action).

The deeper point: your competitive edge isn’t having more data; it’s pricing reality faster and more accurately than the market.

Read: any competitor with a credit card can buy data, but they can’t buy these AI models.

That requires: (1) clear signal classes, (2) quality scoring that respects decay and uncertainty, (3) fusion at the buying group level, and (4) a portfolio discipline that moves budget to expected value, not vanity metrics.

In B2B, buying is neither linear nor individual: multiple stakeholders meander, revisit jobs, and prefer rep-free motions. Build for that. (Sources: Gartner, Harvard Business Review)

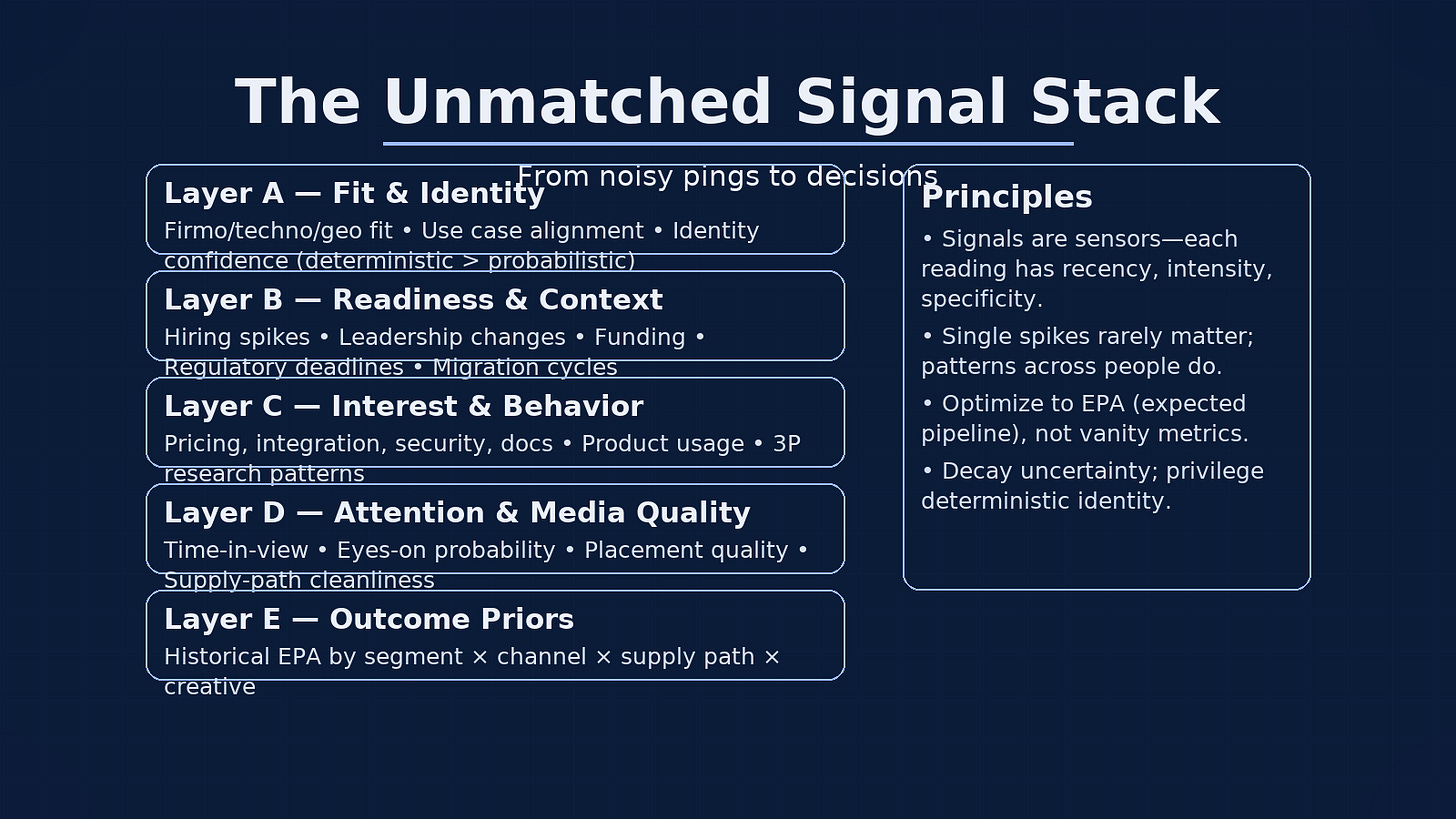

My Signal Stack (v2.5)

Layer A — Fit & Identity (Profile + Confidence)

Company fit (firmographic, technographic, geographic), use-case alignment, and identity confidence (deterministic vs. probabilistic resolution across IP, domain, location, hashed email, and IDs).

Default to deterministic resolution when deciding who to target; let probabilistic matches widen your situational awareness, but decay them faster. (6sense calls this layer “Profile”: it hardly changes, but it remains foundational.) (Source: 6sense)

Read: this model combines fit, intent, and behaviour into a best-in-class account scoring model.

Layer B — Readiness & Context (Market Conditions)

Hiring spikes, leadership changes, funding and regulatory triggers, migration cycles, and more are signals that change timing and receptivity. They do not say “buy now”; they say “engage now, with this message.” Weight them for message timing and channel selection. In practice, that means higher-priority TALs and a bidding strategy that prioritises high-quality engagement, attention metrics, and post-click efficacy against these priority accounts.

Layer C — Interest & Behaviour (Intent-in-Context)

First-party high-value actions (HVAs), such as pricing, integration, security, docs, and product usage, plus third-party research on review sites, publisher networks, and syndicated content. Individually these signals are weak; collectively they are strong, especially when spread across multiple people in the account. First-party HVAs CRUSH lagging indicators, so we score them higher to reflect their full brand relevance and accuracy. Second- and third-party signals are broader and less brand-specific, so they score lower: we still use them, but weight them accordingly.
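To make “individually weak, collectively strong” concrete, here is a minimal sketch of account-level fusion, assuming each reading is already normalised to 0–1. The source weights, the `BREADTH_BONUS` uplift, and the names `Signal` and `account_interest` are all hypothetical illustrations, not the author’s production model: first-party readings simply count for more, and interest spread across more people in the account is multiplied up.

```python
from dataclasses import dataclass

# Hypothetical weights: first-party HVAs outrank second- and third-party research.
SOURCE_WEIGHTS = {"first_party": 1.0, "second_party": 0.5, "third_party": 0.3}
# Hypothetical uplift for each additional person in the account showing interest.
BREADTH_BONUS = 0.25


@dataclass
class Signal:
    person_id: str   # who in the account produced the reading
    source: str      # "first_party", "second_party" or "third_party"
    strength: float  # reading already normalised to 0-1


def account_interest(signals: list[Signal]) -> float:
    """Fuse individual readings into one account-level interest score."""
    if not signals:
        return 0.0
    weighted = sum(SOURCE_WEIGHTS[s.source] * s.strength for s in signals)
    people = len({s.person_id for s in signals})
    # Interest spread across several people counts for more than one person's spike.
    return weighted * (1 + BREADTH_BONUS * (people - 1))
```

The same two readings score higher when they come from two people than from one, which is the multi-person pattern the text argues for.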

Layer D — Attention & Media Quality

Viewability isn’t enough. Calibrate to attention (eyes-on probability, time in view, clutter, placement depth), which consistently tracks closer to outcomes than viewability alone. Use attention floors and attention-qualified supply paths to amplify weaker behavioural signals. (Sources: adelaidemetrics.com, WARC)
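A minimal sketch of attention floors and attention-qualified supply paths, assuming each impression already carries a 0–1 attention score from an attention vendor. The `ATTENTION_FLOOR` value, the `min_share` threshold, and the `Impression` fields are hypothetical placeholders, not any vendor’s actual schema.

```python
from collections import Counter
from dataclasses import dataclass

# Hypothetical floor: impressions below this modelled attention score are not counted.
ATTENTION_FLOOR = 0.4


@dataclass
class Impression:
    account_id: str
    supply_path: str
    attention: float  # assumed 0-1 vendor score (eyes-on, time in view, clutter)


def attention_qualified(impressions, floor=ATTENTION_FLOOR):
    """Keep only impressions that clear the attention floor."""
    return [imp for imp in impressions if imp.attention >= floor]


def qualified_supply_paths(impressions, floor=ATTENTION_FLOOR, min_share=0.5):
    """Supply paths where at least `min_share` of impressions clear the floor."""
    total = Counter(imp.supply_path for imp in impressions)
    passing = Counter(imp.supply_path for imp in attention_qualified(impressions, floor))
    return {path for path, n in total.items() if passing[path] / n >= min_share}
```

Budget can then be steered toward the returned paths, so weaker behavioural signals are amplified only where the media is actually being seen.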

Layer E — Outcome Priors

Historical Expected Pipeline Added (EPA), cut by segment × channel × supply path × creative. Priors tell you where signals have actually converted in the past, so your model doesn’t overreact to shiny noise.
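One way to keep priors from overreacting is to shrink each cell’s historical average toward the overall average, so a cell with one lucky win does not look like a proven pattern. The sketch below assumes each history row is one engaged account with the pipeline it went on to add; the row layout, `PSEUDO_COUNT`, and the shrinkage technique itself are illustrative assumptions and not a definition of EPA.

```python
from collections import defaultdict

# Hypothetical: how many rows a cell needs before its own average dominates.
PSEUDO_COUNT = 20


def outcome_priors(rows, k=PSEUDO_COUNT):
    """Shrunk average pipeline per (segment, channel, supply_path, creative) cell.

    rows: iterable of (segment, channel, supply_path, creative, pipeline_added).
    """
    rows = list(rows)
    if not rows:
        return {}
    global_mean = sum(r[4] for r in rows) / len(rows)
    sums = defaultdict(float)
    counts = defaultdict(int)
    for segment, channel, path, creative, pipeline in rows:
        key = (segment, channel, path, creative)
        sums[key] += pipeline
        counts[key] += 1
    # Small cells are pulled toward the global mean; large cells keep their own history.
    return {key: (sums[key] + k * global_mean) / (counts[key] + k) for key in sums}
```

A larger `k` makes the model more conservative about cells with thin history.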

Principle: a single signal is rarely dispositive; patterns across people are. (6sense: “None are reliable on their own.”)

Part II - Quality Scoring: turn readings into probabilities

A signal is a reading with uncertainty. Score each reading Q(s) on six dimensions, then decay it:

Q(s) = w1*Recency + w2*Intensity + w3*Specificity + w4*Agency + w5*Verifiability + w6*Coverage

Recency — seconds/hours/days; exponential decay.

Intensity — magnitude (pages, dwell, binge factor, event depth).

Specificity — closeness to HVA (pricing/docs/integration ≫ blog skim).

Agency — active human choice (search → doc) vs. passive exposure (autoplay).

Verifiability — identity confidence (deterministic > probabilistic).

Coverage — how many people in the buying group show it; multi-persona beats single-persona spikes. (6sense repeatedly emphasizes group-level patterns.)

Normalise each dimension to 0–1. Keep the weights explainable. If your ops team can’t tell why one session outranked another, you’re not production-ready.
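A minimal sketch of Q(s) as the weighted sum above, returning the per-dimension contributions alongside the total so an ops team can see why one session outranked another. The weight values are hypothetical (the text does not publish its own); they sum to 1, so Q(s) stays in 0–1 when every input is normalised.

```python
# Hypothetical weights w1..w6; the text does not publish its own values.
WEIGHTS = {
    "recency": 0.20,
    "intensity": 0.15,
    "specificity": 0.25,
    "agency": 0.15,
    "verifiability": 0.15,
    "coverage": 0.10,
}


def quality_score(reading: dict[str, float]) -> tuple[float, dict[str, float]]:
    """Return Q(s) and the per-dimension contributions that explain it.

    Every dimension in `reading` must already be normalised to 0-1.
    """
    for dim in WEIGHTS:
        value = reading[dim]
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{dim} must be normalised to 0-1, got {value}")
    contributions = {dim: w * reading[dim] for dim, w in WEIGHTS.items()}
    return sum(contributions.values()), contributions
```

Sorting the returned contributions (for example `sorted(parts.items(), key=lambda kv: kv[1], reverse=True)`) gives a ranked, human-readable explanation of each score.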

Decay: set half-lives by class—minutes/hours for media attention, days for page HVAs, weeks for readiness/context, months for profile/fit.
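Half-life decay can be written as score × 0.5^(age / half-life). The sketch below assumes specific half-lives inside the ranges just given (6 hours, 3 days, 3 weeks, about 6 months) and, following Layer A’s advice to decay probabilistic identity faster, a hypothetical factor that halves the half-life for probabilistic matches. All constants and names are illustrative.

```python
# Hypothetical half-lives in hours, chosen inside the ranges given above:
# hours for media attention, days for page HVAs, weeks for readiness/context,
# months for profile/fit.
HALF_LIFE_HOURS = {
    "media_attention": 6,
    "page_hva": 3 * 24,
    "readiness_context": 3 * 7 * 24,
    "profile_fit": 180 * 24,
}
# Hypothetical: probabilistic identity matches decay twice as fast (half the half-life).
PROBABILISTIC_HALF_LIFE_FACTOR = 0.5


def decayed_score(score: float, signal_class: str, age_hours: float,
                  deterministic: bool = True) -> float:
    """Exponential decay: the score halves once per half-life."""
    if age_hours < 0:
        raise ValueError("age_hours cannot be negative")
    half_life = HALF_LIFE_HOURS[signal_class]
    if not deterministic:
        half_life *= PROBABILISTIC_HALF_LIFE_FACTOR
    return score * 0.5 ** (age_hours / half_life)
```

A page HVA observed three days ago keeps half its score; the same reading from a probabilistic match keeps a quarter.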

Below, we go into:

Moving from noisy data to clear buying group forecasting with bespoke scoring

Moneyball economics for B2B: expected pipeline added, cost per expected pipeline, and revenue above replacement account, with formulas and implementation

HVA ‘sensor design’ that makes HVAs perform like high-value instruments

Creating a daily, weekly, and monthly operating system that manages accounts from nurture to pipeline

Attention as a multiplier linked to pipeline

The playbook to make it all work