The Dark Signal Layer: Capturing What DSPs Miss

Uncovering the invisible data layer that separates vanity metrics from revenue

If you’re buying media in The Trade Desk or DV360, you’re playing on infrastructure built for selling toothpaste and clothes. Adtech was never built for the enterprise B2B buyer, but with some clever implementation and adaptation, the world’s leading DSPs can work for B2B buyers.

At their core, these platforms were engineered for FMCG logic: fast cycles, cheap clicks, broad reach, repeat exposure.

B2B is the opposite: long cycles, expensive decisions, multiple stakeholders, invisible research paths.

And yet most B2B marketers are still letting DSPs optimise campaigns the same way consumer brands do: on click-through rate, CPM efficiency, and viewability.

In a brutal nutshell, that’s why your dashboards look busy while your pipeline looks flat.

Why DSPs Fail B2B

Here’s the uncomfortable truth:

Clicks ≠ interest. In ABM campaigns, the people clicking are often the least valuable — students, competitors, junior staff, or irrelevant traffic.

High CTR inversely correlates with quality. The better the CTR, the worse the on-site engagement tends to be in B2B.

DSPs don’t understand committees. One enterprise deal may involve 12 people, each consuming different assets across months. DV360 won’t optimize for that.

Identity is the wrong unit. The identity layer that fuels DSPs is built around signals linking web browsers with individuals. These signals are not 1:1 with people, let alone buying teams.

So you’re left with campaigns optimised for cheap engagement, not meaningful revenue impact.

In consumer land, the DSP hooks onto transactions. This lets its ML/AI reverse-engineer which creatives, ad placements, times of day, and audience segments drove sales. That loop works beautifully for e-commerce or any environment where a single impression can be tied directly to a credit card transaction.

But these revenue deliverables simply don’t exist in B2B. The procurement team at British Airways will not go onto Boeing’s website and purchase ten aircraft on an Amex.

That leaves B2B in limbo:

CTR becomes the proxy for intent.

Harder conversion metrics are absent.

Closing the linkage to pipeline was already difficult — and since privacy movements kneecapped attribution, it’s gotten worse.

So what’s the answer? It’s not “let’s build our own DSP.” It’s to rethink how B2B plugs into the programmatic stack.

Why Building a DSP Isn’t the Answer

Every few years, a frustrated B2B team says: “We’ll just build our own DSP.”

It sounds logical: if the pipes don’t fit, replace them. In practice, though, it’s a dead end:

Batch vs. real-time. Most B2B marketers don’t have enough event volume to justify always-on, impression-by-impression optimisation. The DSP space is also under real strain: big names have exited, and the whole model is under threat, from the push toward batch processing to the AI explosion. DSPs have their place, but building one does not look like a sound investment thesis for 2025-2028.

AI pressure on DSPs. Agentic AI is already putting pressure on the DSP layer. Expect them to abstract bidding logic away from human traders and plug directly into data pipelines. Building your own DSP now is like opening a taxi company when autonomous cars are around the corner.

Liquidity & access. DSPs have the supply integrations, the seat licenses, the pipes. Building all of that from scratch costs millions and doesn’t solve your fundamental B2B data gap. These partnerships take time and money to establish, and they are a commodity layer anyway.

Tuned algorithms and API pipelines can fill in the gaps, while the heavy infrastructure layer stays with the specialists, because 80%+ of it is the same for B2B as it is for B2C.

A smarter play is to tune enterprise DSPs for B2B by filling the data blind spots, not reinventing the infrastructure.

Watching the Sell-Side Curation Layer

If you want to see where the puck is going, look at the supply-side curation layer. Exchanges and SSPs are leaning into packaging inventory with more intelligence: audience overlays, contextual curation, privacy-safe enrichment. This used to be the bidder’s job. Now traditional SSPs and DSPs are converging in the middle. For the first time in about a decade, the pendulum has swung meaningfully back toward the sell side.

For B2B, this creates two opportunities:

Lightweight bidding at source. Instead of always working through a generic DSP, there’s scope to plug optimisation directly into curated supply packages. Imagine a “B2B-only” curated exchange where bidding logic is embedded at the SSP layer.

Cleaning the supply chain. B2B budgets are often small compared to those of FMCG giants. Eliminating waste (made-for-advertising sites, irrelevant geos, non-business traffic) can deliver disproportionate gains. Curation is the lever.

The trick will be to balance DSP independence with smart partnerships at the sell-side.
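Curation as a lever is easy to picture as a pre-bid filter. The sketch below is a minimal illustration. It assumes each inventory opportunity arrives with a domain, a country code, and a flag saying whether the traffic resolves to a business network. The `Opportunity` fields, `MFA_BLOCKLIST` and `ALLOWED_GEOS` are hypothetical placeholders, not any SSP's or DSP's real schema.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Opportunity:
    """One inventory opportunity (hypothetical fields, not a real bid-request schema)."""
    domain: str
    country: str          # ISO 3166-1 alpha-2 code, e.g. "GB"
    is_business_ip: bool  # assumed output of an upstream IP-to-company lookup


# Hypothetical curation rules; real lists would come from your own supply analysis.
MFA_BLOCKLIST = {"example-mfa-site.com", "clickbait-gallery.net"}
ALLOWED_GEOS = {"GB", "US", "DE"}


def rejection_reason(opp: Opportunity) -> str | None:
    """Return the type of waste an opportunity represents, or None if it passes."""
    if opp.domain.lower() in MFA_BLOCKLIST:
        return "made-for-advertising"
    if opp.country.upper() not in ALLOWED_GEOS:
        return "irrelevant-geo"
    if not opp.is_business_ip:
        return "non-business-traffic"
    return None


def curate(opps: list[Opportunity]) -> tuple[list[Opportunity], dict[str, int]]:
    """Keep clean opportunities and count rejections by reason."""
    kept: list[Opportunity] = []
    rejected: dict[str, int] = {}
    for opp in opps:
        reason = rejection_reason(opp)
        if reason is None:
            kept.append(opp)
        else:
            rejected[reason] = rejected.get(reason, 0) + 1
    return kept, rejected
```

The filter counts rejections by reason instead of silently dropping them. That makes it easy to see which kind of waste is eating a small budget.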

FMCG vs. B2B: Why DSPs Aren’t Built for Purpose

Think about what FMCG needs vs. what B2B needs:

FMCG DSP logic:

Billions of impressions.

Homogeneous products (one SKU of toothpaste).

Immediate transactions.

ML trained on short feedback loops. After all, who spends 9 months (the average B2B sales cycle) thinking about which toothpaste to buy?

B2B DSP needs:

Tiny, fragmented segments (target account lists of 1,000 companies, signals often <500 per account).

Multiple buyer personas in a single decision.

Long, opaque sales cycles.

Micro-signals instead of transactions.

DSPs were engineered for the former. When forced into B2B use cases, they default back to CTR and reach-based logic — metrics that don’t map to pipeline impact.

The Smarter Model

The smarter model isn’t to build a subpar homebrew DSP. It’s to build the missing layers on top of enterprise systems.

That’s the FunnelFuel play:

Keep the scale and liquidity of platforms like The Trade Desk.

Plug in B2B-specific data, signals, and measurement layers.

Close the loop between high-value actions (HVAs) and DSP optimisation, so the enterprise bidder’s AI can optimise for B2B outcomes.

This way, enterprise B2B brands don’t need to compromise: they can leverage the best of the programmatic ecosystem while tuning it to their unique buying cycles.

That’s the path forward: not replacing DSPs, but augmenting them with the Dark Signal Layer.
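To show why the optimisation target matters, here is a rough sketch that ranks line items on CTR versus weighted high-value actions (HVAs). The HVA names and their relative values are hypothetical. In practice, the weights would need calibrating against your own closed-won history.

```python
from __future__ import annotations

from dataclasses import dataclass, field

# Hypothetical HVA weights: relative values, not currency.
HVA_VALUES = {
    "pricing_page_dwell": 5.0,
    "security_form_complete": 8.0,
    "api_docs_view": 4.0,
    "roi_calculator_use": 6.0,
}


@dataclass
class LineItem:
    """Delivery stats for one hypothetical DSP line item."""
    name: str
    impressions: int
    clicks: int
    hvas: dict[str, int] = field(default_factory=dict)  # HVA name -> count

    def ctr(self) -> float:
        """Click-through rate: the default proxy B2B campaigns fall back on."""
        return self.clicks / self.impressions if self.impressions else 0.0

    def hva_value_per_mille(self) -> float:
        """Weighted HVA value per 1,000 impressions: the target we would rather optimise."""
        if not self.impressions:
            return 0.0
        total = sum(HVA_VALUES.get(name, 0.0) * count for name, count in self.hvas.items())
        return 1000 * total / self.impressions


def rank(items: list[LineItem], metric) -> list[str]:
    """Return line-item names ordered best-first by the given metric function."""
    return [item.name for item in sorted(items, key=metric, reverse=True)]
```

Try a high-CTR line item with few HVAs against a low-CTR one with many. `rank(items, LineItem.ctr)` and `rank(items, LineItem.hva_value_per_mille)` return them in opposite orders. That is the gap between what the bidder optimises by default and what pipeline needs.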

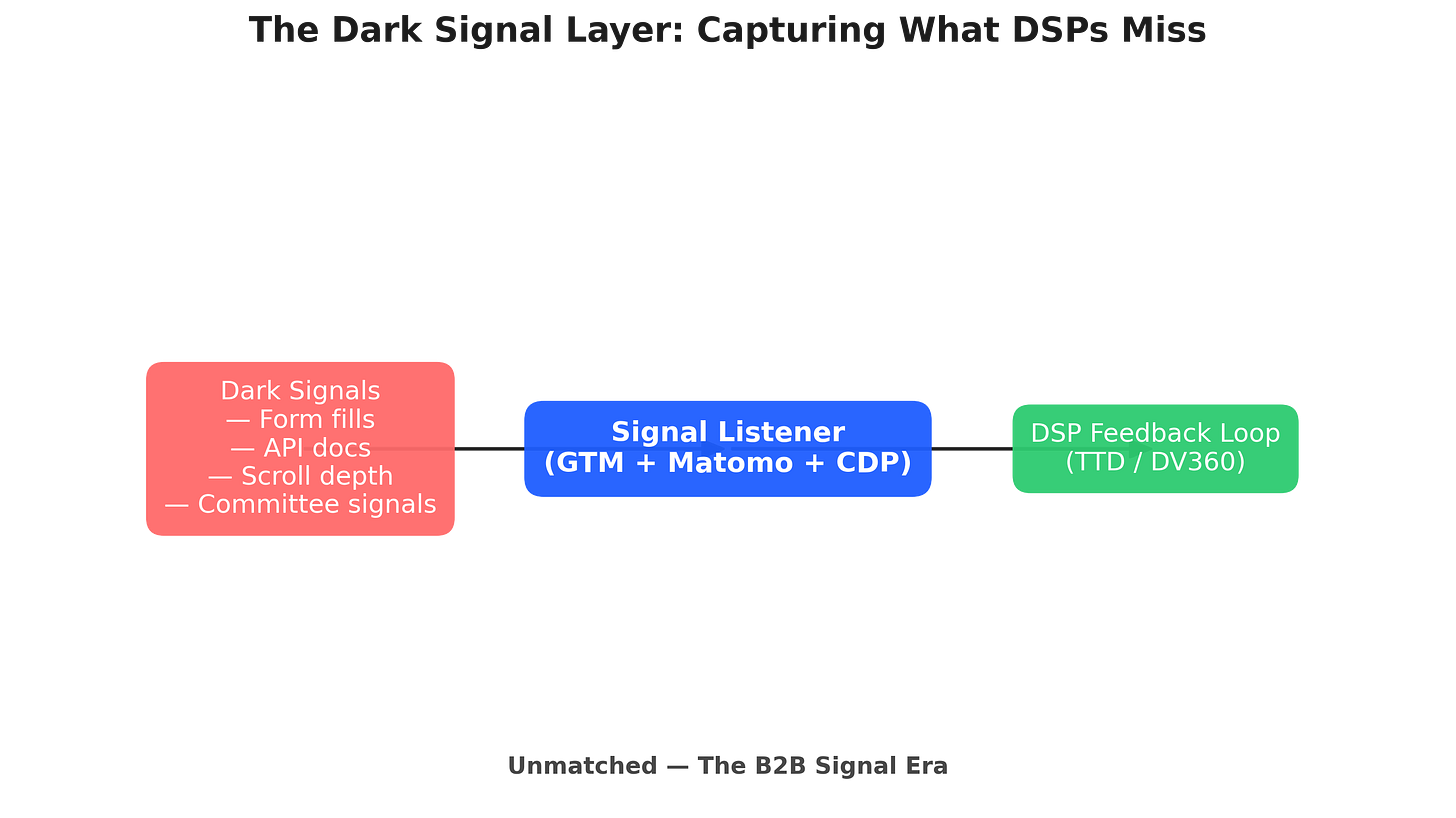

The solution lies in what I call the Dark Signal Layer: the intent-rich actions happening every day in B2B buyer journeys that DSPs can’t see — and therefore can’t optimize toward.

These signals sit below the surface of the adtech pipes. They’re hidden in web sessions, buried in forms, scattered across PDFs, and distributed among buying teams. They don’t look like “transactions” in the consumer sense — but they are the strongest early predictors of pipeline.

What Makes a Dark Signal

1. Form interactions (beyond the submit button).

DSPs see a click on an ad. They don’t see that a VP of IT from a target account spent 90 seconds filling out a security assessment form, abandoned on the final field, and came back three days later to complete it.

Starts, field-level drop-offs, and time on form are far stronger intent signals than a click.

2. High-value content.

In B2B, certain assets scream “buying cycle.”

API documentation, security certifications, implementation guides, ROI calculators — these aren’t casual reads. They’re proof that technical evaluators or procurement stakeholders are engaged.

DSPs can’t distinguish between someone browsing your blog and someone diving into your integration guide — but your pipeline can.

3. Micro-attention.

Scroll depth, dwell time, repeat visits from the same account — all ignored by DSP optimisation.

These micro-signals matter: if someone spends 6 minutes on your pricing page, it’s a bigger deal than 100 anonymous banner clicks.

4. Committee behavior.

No single cookie represents a deal. The reality is multiple people from the same company, across functions, researching in parallel.

One week you might see marketing reading a case study, IT downloading the API docs, and finance scanning an ROI calculator. Together, those visits form a committee-level signal.
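Here is a small classifier that shows roughly how the first three signal types might be picked out of raw web events. It assumes events have already been exported from a tag manager or CDP into a simple record. The `RawEvent` fields, the event names, `HIGH_VALUE_PATHS`, and the 60-second and 75% scroll thresholds are all hypothetical. Committee behaviour only shows up once events are grouped by company, so the sketch just carries the resolved account on each event for later aggregation.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class RawEvent:
    """A hypothetical web event as exported from a tag manager or CDP."""
    visitor_id: str
    account: str | None      # company resolved via IP/enrichment, if any
    name: str                # e.g. "page_view", "form_start", "engagement"
    path: str = ""
    seconds: float = 0.0     # dwell time or time on form
    scroll_pct: float = 0.0  # maximum scroll depth, 0-100


# Hypothetical URL prefixes for assets that tend to signal a buying cycle.
HIGH_VALUE_PATHS = ("/docs/api", "/security", "/implementation", "/roi-calculator", "/pricing")
FORM_EVENTS = {"form_start", "form_field_drop", "form_abandon", "form_submit"}


def classify(event: RawEvent) -> str | None:
    """Map one raw event to a dark-signal type, or None if it carries no signal."""
    if event.name in FORM_EVENTS:
        return "form_interaction"
    if event.name == "page_view" and event.path.startswith(HIGH_VALUE_PATHS):
        return "high_value_content"
    if event.name == "engagement" and (event.seconds >= 60 or event.scroll_pct >= 75):
        return "micro_attention"
    return None  # e.g. a bare ad click or a blog page view
```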

Why They’re Invisible to DSPs

DSPs were built to track impressions → clicks → transactions. Anything outside that flow is “dark.”

They don’t capture intra-session behavior (scrolls, repeats, dwell).

They don’t aggregate across people into account-level views.

They don’t weight different actions based on buying intent.

That’s why even the smartest AI inside The Trade Desk or DV360 defaults to CTR. It’s not that the algorithms are dumb — it’s that the signal inputs are thin. Garbage in, garbage out.
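Here is a minimal sketch of two things DSPs skip: rolling individual signals up to the account, and weighting them by intent. It assumes signals arrive as `(account, visitor_id, signal_type)` tuples. The signal names and `WEIGHTS` values are hypothetical, with the ad click deliberately weighted lowest.

```python
from __future__ import annotations

from collections import defaultdict

# Hypothetical intent weights per signal type; the ad click is deliberately lowest.
WEIGHTS = {
    "ad_click": 0.5,
    "micro_attention": 2.0,
    "high_value_content": 3.0,
    "form_interaction": 5.0,
}


def account_scores(signals: list[tuple[str, str, str]]) -> dict[str, float]:
    """Roll (account, visitor_id, signal_type) tuples up into weighted account scores.

    Each signal type counts once per visitor, so one person repeating an
    action cannot inflate the account's score on their own.
    """
    seen: set[tuple[str, str, str]] = set()
    scores: defaultdict[str, float] = defaultdict(float)
    for account, visitor, signal in signals:
        if (account, visitor, signal) in seen:
            continue
        seen.add((account, visitor, signal))
        scores[account] += WEIGHTS.get(signal, 0.0)
    return dict(scores)
```

Deduplicating per visitor is one design choice among several. It favours breadth across people over the depth of a single person's activity, which matches the committee-driven nature of B2B deals.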

Why They Matter More Than CTR

They correlate with revenue, not vanity. A pricing page dwell is more likely to end in pipeline than a banner click.

They reflect real buyer progression. Committees move through research phases via content — API docs, ROI tools, security pages.

They build account-level intelligence. A single click tells you nothing; a cluster of dark signals tells you a buying cycle has begun.

In other words, the Dark Signal Layer is what transforms anonymous traffic into predictive pipeline intelligence.
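The committee example earlier (marketing reading a case study, IT downloading API docs, finance scanning an ROI calculator) can be turned into a simple detector: flag an account when several distinct functions engage within a short window. This is a sketch under stated assumptions. The content-to-function mapping, the 7-day window and the three-function threshold are hypothetical, and inferring job function from content type is a crude stand-in for real persona data.

```python
from __future__ import annotations

from datetime import date, timedelta

# Hypothetical mapping from content type to the committee function it suggests.
CONTENT_TO_FUNCTION = {
    "case_study": "marketing",
    "api_docs": "it",
    "security_page": "it",
    "roi_calculator": "finance",
    "pricing": "finance",
}


def committee_accounts(
    visits: list[tuple[str, date, str]],
    window_days: int = 7,
    min_functions: int = 3,
) -> set[str]:
    """Flag accounts where at least `min_functions` distinct functions engage
    within any `window_days`-day window.

    visits: (account, visit_date, content_type) tuples.
    """
    by_account: dict[str, list[tuple[date, str]]] = {}
    for account, visit_date, content in visits:
        function = CONTENT_TO_FUNCTION.get(content)
        if function is not None:
            by_account.setdefault(account, []).append((visit_date, function))

    flagged: set[str] = set()
    for account, hits in by_account.items():
        # Try a window starting at each visit; O(n^2), acceptable for a sketch.
        for start, _ in hits:
            end = start + timedelta(days=window_days)
            functions = {f for d, f in hits if start <= d < end}
            if len(functions) >= min_functions:
                flagged.add(account)
                break
    return flagged
```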

The Challenge

The problem isn’t identifying that these signals exist. The problem is:

Capturing them consistently across sites and touchpoints.

Normalising them into a scoring model that separates “interest” from “pipeline intent.”

Feeding them back into media systems that were never designed for them.

That’s where the Signal Listener comes in — a middleware that listens to Dark Signals, normalizes them, and turns them into optimization fuel for your campaigns.
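The Signal Listener's three jobs (listen, normalise, feed back) can be sketched in miniature. This is a hypothetical illustration, not the FunnelFuel implementation. The per-source field names in `FIELD_MAPS`, the HVA names and values, and the CSV columns are all assumptions. Any real DSP offline-conversion import will have its own required schema.

```python
from __future__ import annotations

import csv
import io
from dataclasses import dataclass


@dataclass(frozen=True)
class Signal:
    """A normalised dark signal, independent of which tool captured it."""
    source: str
    account: str
    hva: str
    timestamp: str  # ISO 8601


# Hypothetical per-source field names; real GTM/GA4/CDP exports will differ.
FIELD_MAPS = {
    "gtm": {"account": "company", "hva": "event", "timestamp": "ts"},
    "cdp": {"account": "account_domain", "hva": "action", "timestamp": "occurred_at"},
}

# Hypothetical HVA values, used as the conversion value the bidder optimises toward.
HVA_VALUES = {"pricing_dwell": 5, "api_docs_view": 4, "security_form_complete": 8}


def normalise(source: str, raw: dict) -> Signal | None:
    """Translate one raw payload into a Signal; drop non-HVAs and unresolved accounts."""
    fields = FIELD_MAPS[source]
    account = raw.get(fields["account"])
    hva = raw.get(fields["hva"])
    timestamp = raw.get(fields["timestamp"])
    if not account or not timestamp or hva not in HVA_VALUES:
        return None
    return Signal(source, account.strip().lower(), hva, timestamp)


def listen(payloads: list[tuple[str, dict]]) -> list[Signal]:
    """Normalise a batch of (source, payload) pairs, discarding noise."""
    signals = []
    for source, raw in payloads:
        signal = normalise(source, raw)
        if signal is not None:
            signals.append(signal)
    return signals


def to_conversion_csv(signals: list[Signal]) -> str:
    """Emit a generic conversion file (illustrative columns, not any DSP's schema)."""
    buf = io.StringIO()
    writer = csv.writer(buf)
    writer.writerow(["account", "event", "timestamp", "value"])
    for s in signals:
        writer.writerow([s.account, s.hva, s.timestamp, HVA_VALUES[s.hva]])
    return buf.getvalue()
```

With a per-source field map, adding a new capture point (say, a second website's tag setup) becomes a config change rather than new scoring logic.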

👉 The rest of this post is for premium subscribers, where I’ll walk through the exact blueprint for building a Signal Listener using GTM, GA4, or your CDP, and how to train DSPs on High Value Actions (HVAs) instead of CTR. Upgrade to our ‘expenses tier’ for $99 to get access to our tool that builds these for you: simply configure it and set it up in GTM.